As organisations accelerate AI adoption, a familiar pattern is emerging: security teams – often the CISO – are increasingly asked to own or coordinate AI governance. That outcome is not an accident. Security leaders already operate across departmental boundaries, manage data inventories, run cross-functional programs and are trusted by executives and boards to solve hard, systemic problems. AI initiatives are inherently cross-disciplinary, data-centric and integrated into product and vendor ecosystems, so responsibility naturally flows toward teams that already do that work. This operational reality creates an opportunity: security can (and should) move from firefighting to shaping safe adoption practices that preserve value and reduce harm.

In this blog I outline key strategies for successfully leading AI governance initiatives in your organisation.

The core governance mindset shift: from blocking to enabling safe adoption

The ship has sailed: people and teams will use AI. Blocking adoption is largely futile and often counter-productive. The right posture for security is facilitation: enable safe, responsible use by embedding controls and governance into architecture, development pipelines, procurement and day-to-day workflows. That means shifting from saying “no” to saying “here’s how we make it safe.”

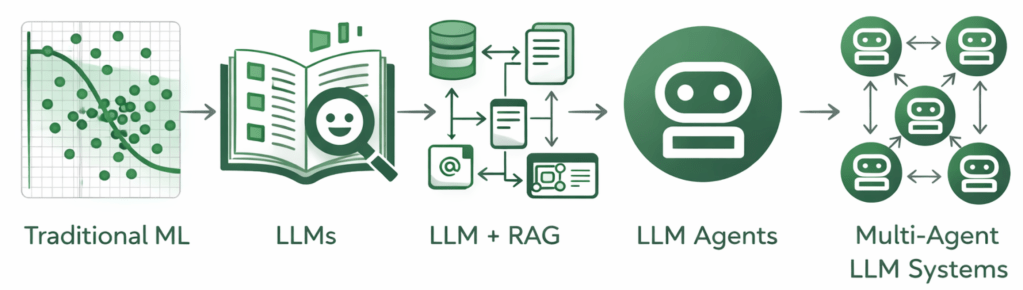

Agentic AI and blast radius

Agentic systems (AI that acts on behalf of users) amplify traditional risks because they require access rights to external tools and personal data, such as calendars, contact lists, wallets and file systems. The more autonomy you grant an agent, the larger its permission set and the bigger the blast radius when it misbehaves. Practical consequences:

- Agents create new privacy and exfiltration channels: a compromised or coerced agent can leak addresses, payment credentials or documents. Some agent features explicitly warn that signed-in agents can access sensitive data and perform actions on behalf of users.

- Plugin/third-party integrations introduce complex trust chains and prompt-injection-like threats that may lead to unauthorised actions or data leakage.

- Public rollout of agentic assistants has prompted urgent privacy warnings and broader debate about the tradeoffs between convenience and security.

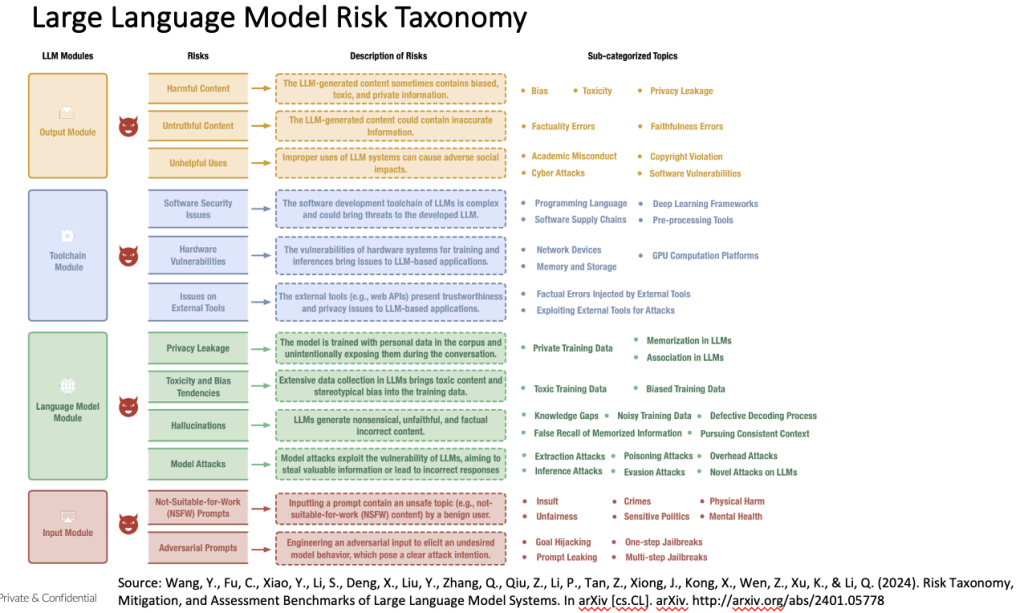

Identify AI risks specific to your business case

The MIT AI Risk Repository is invaluable for AI risk management. Below is an example of my preferred risk taxonomy.

When deploying AI in a SaaS model, concentrate on controlling inputs and outputs; the remaining layers are generally managed by the provider under the shared responsibility model.

Practical governance architecture: four pillars with engineering detail

Use a risk-centric lifecycle approach – Govern, Map, Measure, Manage – anchored to organisational accountability. This maps to the NIST AI Risk Management Framework and is operationally compatible with MITRE’s AI maturity model for cross-functional alignment. Start small and iterate: a light self-assessment against these frameworks uncovers gaps and creates a roadmap for capability building.

Concrete engineering and program controls:

- Embed approvals in workflows: Integrate AI approvals into product planning tools, CI/CD gates, procurement checklists and vendor onboarding rather than relying on extra meeting cycles.

- Treat AI like SaaS and supply chain risk: Apply existing third-party security reviews and extend them to model provenance, API access, data flows and client-side privacy expectations.

- Permission contracts and least privilege: Require agents to request narrowly scoped, time-limited tokens with explicit purpose bindings. Design permission grants as attestable contracts (purpose, scope, expiry, auditable token).

- Observability-first design: Instrument agents with structured telemetry: inputs, decision traces, API calls, tokens used, confidence scores and decision rationale. Observability is essential to diagnose misbehaviour, conduct forensics and enable continuous evaluation.

- Evaluation and QA pipelines: Implement automated test suites, static analysis for generated code, adversarial testing and red-team scenarios. All AI-generated code should be reviewed and run through SAST/DAST where applicable.

- Fail-safe behavioural controls: Enforce sandboxing, rate limits, transaction thresholds (human approval above X value), and hard kill switches for autonomous agents.

- Telemetry and accountability: Preserve immutable logs for actions taken by agents (who authorised the token, what was requested, what was executed) to reconstruct incidents and enforce accountability.

- Privacy posture and user education: Update privacy notices to explain how AI interacts with data, provide user-level consent controls and run outreach to raise AI literacy before new agent features are rolled out.
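To make the telemetry and accountability control more concrete, here is a minimal Python sketch of an append-only action log in which each record includes the hash of the previous one. It is an illustration under stated assumptions, not a production design: the `ActionLog` class and its field names are hypothetical, and hash chaining only makes tampering detectable. True immutability would still depend on where the log is stored (for example, write-once storage).

```python
import hashlib
import json
from dataclasses import dataclass, field


def _digest(body: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the record body."""
    canonical = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()


@dataclass
class ActionLog:
    """Hypothetical append-only log; each record commits to the previous hash."""
    records: list = field(default_factory=list)

    def append(self, authorised_by: str, requested: str, executed: str) -> dict:
        prev_hash = self.records[-1]["hash"] if self.records else "0" * 64
        body = {
            "seq": len(self.records),
            "authorised_by": authorised_by,  # who authorised the token
            "requested": requested,          # what the agent asked to do
            "executed": executed,            # what was actually executed
            "prev_hash": prev_hash,
        }
        body["hash"] = _digest(body)  # digest is computed before "hash" is added
        self.records.append(body)
        return body

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered record breaks it."""
        prev_hash = "0" * 64
        for record in self.records:
            body = {k: v for k, v in record.items() if k != "hash"}
            if record["prev_hash"] != prev_hash or record["hash"] != _digest(body):
                return False
            prev_hash = record["hash"]
        return True


log = ActionLog()
log.append("alice", "read calendar", "read calendar")
log.append("alice", "send email", "send email")
print(log.verify())
```

During an incident review, a failed `verify()` tells you the record of what the agent did can no longer be trusted, which is itself a finding worth escalating.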

Governance for the regulatory and legal reality

Expect a patchwork of AI, security, IP and privacy laws. Practical advice:

- Keep legal in the loop early and often, and stay agile enough to re-prioritise once regulations are finalised.

- Treat AI features as changes to your supply chain: document third- and fourth-party dependencies and contractually require security/privacy attestations.

- Maintain continuous horizon scanning: regulatory alerts, high-profile incidents, and emergent litigation will change priorities quickly.

A persistent myth and the pragmatic alternative

Myth: “We must have a human in the loop for everything.”

Reality: Human-in-the-loop does not scale for many agentic use cases. For high-risk, sensitive, or transactional operations you can and should require human approval; for lower-risk or high-velocity workflows, rely instead on well-engineered rule sets, telemetry, automated evaluation, and narrowly scoped policies that preserve accountability. In short: use human-in-the-loop strategically, not as a universal bandage. I discuss specific use cases in this blog.
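As a rough illustration of using human approval strategically, the Python sketch below routes an agent action either to automated policy or to a person, based on a risk rating and a transaction threshold. The names (`AgentAction`, `route`, `APPROVAL_THRESHOLD`), the three-level risk scale and the threshold value are all assumptions made for the example; real criteria would come from your own risk assessment.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO = "auto"             # proceed under automated policy and logging
    HUMAN_APPROVAL = "human"  # pause until a person approves


@dataclass(frozen=True)
class AgentAction:
    name: str
    risk: str            # "low", "medium" or "high" (assumed rating scale)
    amount: float = 0.0  # transaction value; 0 for non-transactional actions


APPROVAL_THRESHOLD = 500.0  # assumed value; set per business context


def route(action: AgentAction) -> Route:
    """Send high-risk or high-value actions to a human, everything else to automation."""
    if action.risk == "high":
        return Route.HUMAN_APPROVAL
    if action.amount > APPROVAL_THRESHOLD:
        return Route.HUMAN_APPROVAL
    return Route.AUTO


print(route(AgentAction("summarise inbox", "low")))
print(route(AgentAction("pay invoice", "medium", 1200.0)))
```

Keeping the rule this explicit means the approval boundary can be reviewed, tested and changed like any other policy artefact.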

Operational steps you can take this quarter

- Run a light MITRE AI maturity self-assessment to identify capability gaps and prioritise quick wins.

- Inventory imminent AI product roadmaps and vendor plans for the next 2–4 quarters to anticipate required permissions and data flows.

- Add AI checkpoints to existing artefact gates: product requirements, procurement, security review and CI/CD.

- Require “permission manifests” from product teams for any agentic feature (what data, what APIs, who is the owner, risk rating).

- Implement immediate observability guardrails for any agent prototype (structured logs + replayable traces).
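A permission manifest can be as simple as a small structured record that is checked automatically at a review gate. The Python sketch below shows one possible shape, assuming the four fields mentioned above (data, APIs, owner, risk rating). The class name, the validation rules and the rating scale are hypothetical.

```python
from dataclasses import dataclass

ALLOWED_RISK_RATINGS = {"low", "medium", "high"}  # assumed scale


@dataclass(frozen=True)
class PermissionManifest:
    feature: str
    data: tuple       # data categories the agent touches
    apis: tuple       # APIs the agent calls
    owner: str        # accountable person or team
    risk_rating: str


def validate(manifest: PermissionManifest) -> list:
    """Return a list of problems; an empty list means the manifest passes the gate."""
    problems = []
    if not manifest.owner.strip():
        problems.append("owner is required")
    if not manifest.apis:
        problems.append("at least one API must be declared")
    if manifest.risk_rating not in ALLOWED_RISK_RATINGS:
        problems.append(f"unknown risk rating: {manifest.risk_rating!r}")
    return problems


manifest = PermissionManifest(
    feature="meeting-scheduler",
    data=("calendar",),
    apis=("calendar.read",),
    owner="product-team-a",
    risk_rating="medium",
)
print(validate(manifest))
```

A check like this could run in the same CI/CD or procurement gate as the other AI checkpoints, so a missing owner or undeclared API blocks the change early.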

Practical governance patterns worth testing

- Permission-broker architecture – central service that mediates agent requests for access, issuing purpose-bound, auditable tokens and enforcing time-limited scopes. This isolates apps from credential sprawl and simplifies revoke/forensic workflows.

- Agent sandboxes and staged escalation – give agents capabilities in a read-only sandbox first; automatically escalate to broader permissions only after passing defined validation gates (simulated tasks, privacy checks, human review).

- “Why did it do that?” trace artefacts – require agents to emit compact, machine-readable rationales for decisions (feature inputs and top contributing evidence). This reduces firefighting time and improves root cause analysis.

- AI feature inoculation – run “scripted misuse” scenarios as part of release testing (prompt injection, social engineering) and measure immunity.
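To illustrate the permission-broker pattern, here is a minimal Python sketch of a central service that issues signed, purpose-bound, time-limited grants and checks them on use. Everything here is an assumption for illustration: the `PermissionBroker` class, the claim names and the HMAC-based signing are one possible design, and a real deployment would more likely build on an existing identity provider and token standard.

```python
import hashlib
import hmac
import json
import time
from typing import Optional


class PermissionBroker:
    """Hypothetical broker: issues, verifies and revokes purpose-bound grants."""

    def __init__(self, secret: bytes):
        self._secret = secret
        self._revoked = set()
        self._counter = 0

    def _sign(self, claims: dict) -> str:
        payload = json.dumps(claims, sort_keys=True).encode("utf-8")
        return hmac.new(self._secret, payload, hashlib.sha256).hexdigest()

    def issue(self, agent: str, purpose: str, scope: list, ttl_seconds: int,
              now: Optional[float] = None) -> dict:
        issued_at = time.time() if now is None else now
        self._counter += 1
        claims = {
            "grant_id": f"grant-{self._counter}",
            "agent": agent,
            "purpose": purpose,                    # why access is needed
            "scope": sorted(scope),                # what may be accessed
            "expires_at": issued_at + ttl_seconds  # when access ends
        }
        return {"claims": claims, "signature": self._sign(claims)}

    def revoke(self, grant_id: str) -> None:
        self._revoked.add(grant_id)

    def allows(self, grant: dict, permission: str,
               now: Optional[float] = None) -> bool:
        current = time.time() if now is None else now
        claims = grant["claims"]
        if not hmac.compare_digest(grant["signature"], self._sign(claims)):
            return False  # claims were altered after issue
        if claims["grant_id"] in self._revoked:
            return False  # grant was revoked centrally
        if current >= claims["expires_at"]:
            return False  # grant has expired
        return permission in claims["scope"]


broker = PermissionBroker(b"demo-secret")
grant = broker.issue("scheduler-agent", "book meeting", ["calendar.read"], ttl_seconds=60)
print(broker.allows(grant, "calendar.read"))
print(broker.allows(grant, "files.read"))
```

Because every access decision passes through one place, revocation and forensic review only need to look at the broker rather than at each application's credentials.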

The most underestimated AI risk for 2026

Designing and deploying agents without observability and continuous evaluation – unleashing autonomous systems whose decisions you cannot explain, monitor or diagnose – is the single most underestimated AI risk going into 2026.

Bonus point: building AI features that nobody asked for wastes engineering effort and creates new risk surfaces. Start from business needs and drivers; only then add autonomy.

AI governance is not a single team’s job. Security is well-placed to coordinate, but success depends on embedding governance into product development, procurement, legal review and operational tooling.

Resources

- The MIT AI Risk Repository (a living catalogue of documented AI risks) is invaluable for threat mapping and for understanding multi-agent risks and incident examples.

- MITRE’s AI Maturity Model provides language to align cross-functional stakeholders and measure progress.

- NIST’s AI RMF (Govern, Map, Measure, Manage) gives an operational risk-management frame that integrates well into governance programs and policy design.

These resources complement each other: MITRE gives you the organisational language and maturity levers, NIST provides the operational functions and playbook actions, and MIT’s repository supplies concrete risks and incident precedents.
