If you work for or (even better) co-founded a tech startup, you are already busy. Hopefully not so busy that you ignore security completely, but almost certainly too busy to implement one of the industry security frameworks, like the NIST Cybersecurity Framework (CSF). Although the CSF and other standards are useful, implementing them in a small company can be resource intensive.

I previously wrote about security for startups. In this post, I would like to share some ideas for activities you might consider (in no particular order) instead of implementing a security standard straight away. The individual elements and priorities will, of course, vary depending on your business type and needs, and this list is not exhaustive.

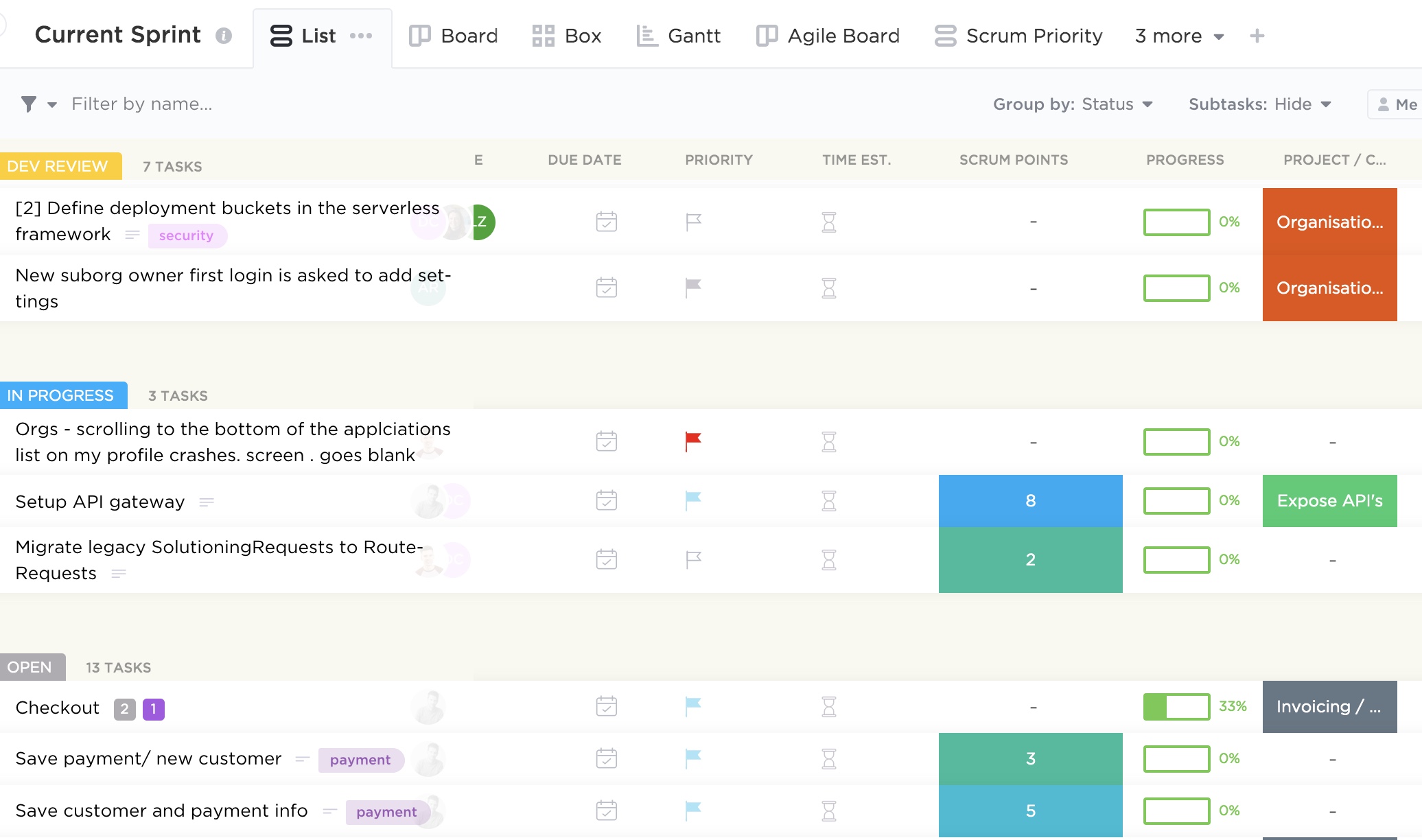

Product security

Information security underpins all products and services to offer customers an innovative and frictionless experience.

- Improve product security, robustness and stability through a secure software development process

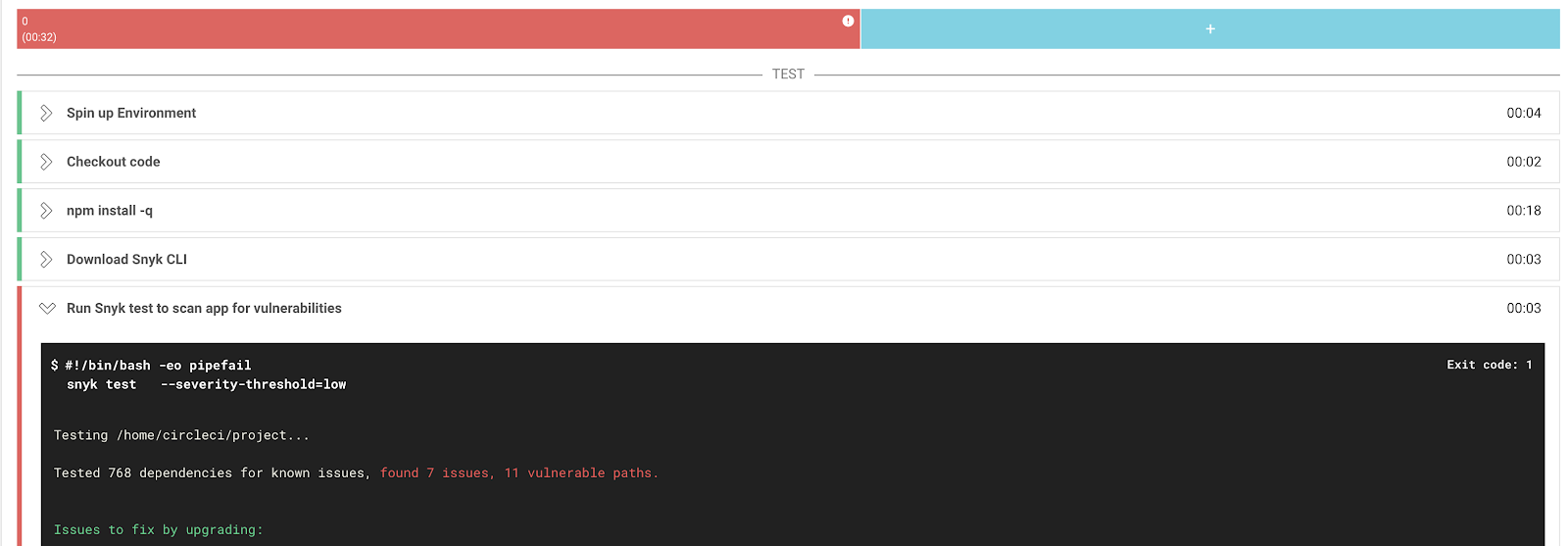

- Automate security tests and prevent secrets in code

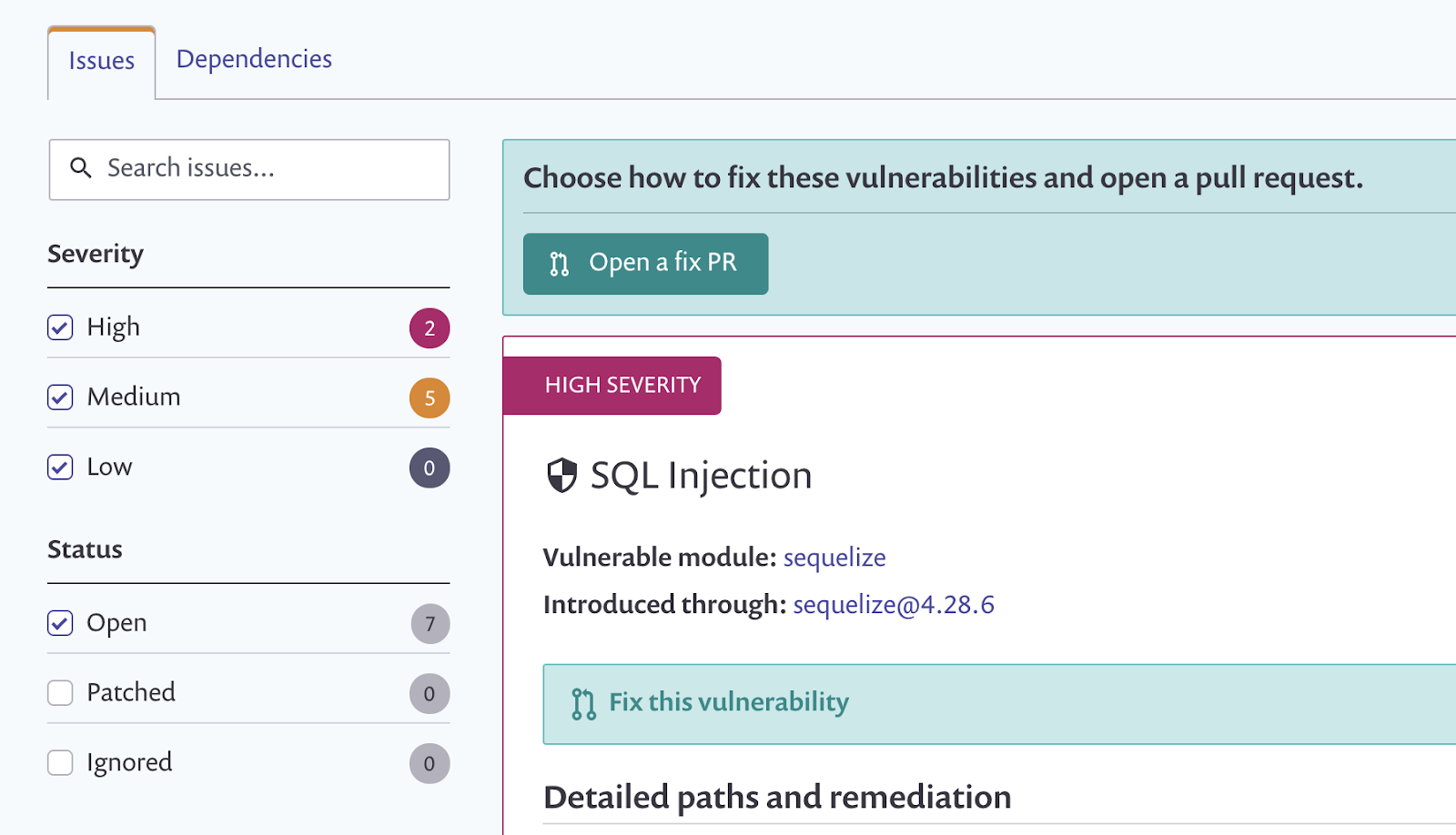

- Upgrade vulnerable dependencies

- Secure the delivery pipeline
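As an illustration of keeping secrets out of code, here is a minimal sketch of the kind of check that could run before a commit or in the pipeline. The three patterns and the `find_secrets` helper are assumptions made for this example, not a complete rule set; a dedicated secret scanner covers far more cases and would normally be the better choice.

```python
import re

# Illustrative patterns only (assumed for this sketch); real scanners
# ship much larger and better-tuned rule sets.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(
        r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"
    ),
    "hardcoded_password": re.compile(
        r"(?i)\bpassword\s*=\s*['\"][^'\"]{4,}['\"]"
    ),
}


def find_secrets(text):
    """Return (line_number, rule_name) pairs for lines matching a pattern."""
    findings = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for rule_name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((line_number, rule_name))
    return findings
```

A pre-commit hook or pipeline step could call `find_secrets` on each changed file and fail the build when the result is not empty.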

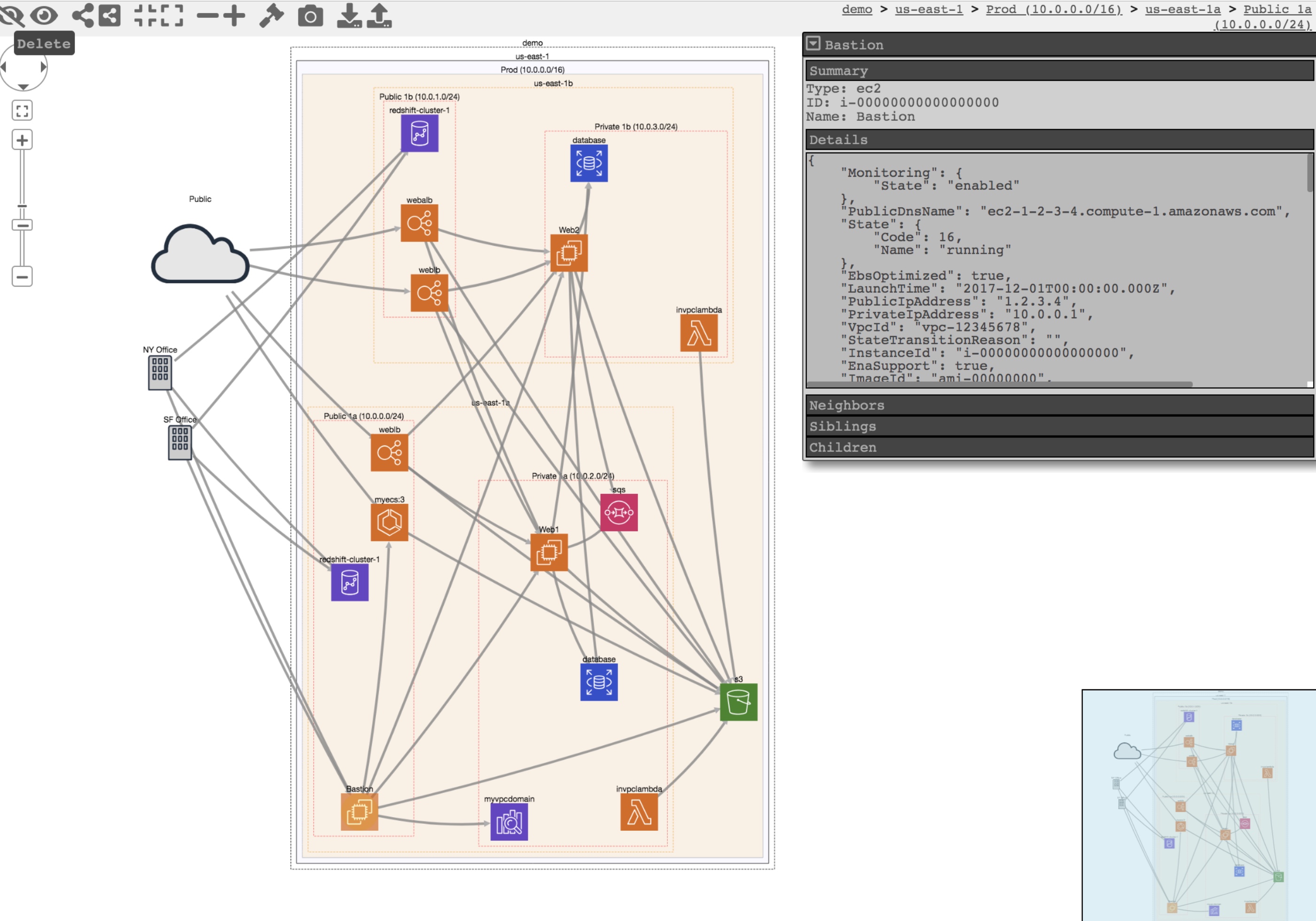

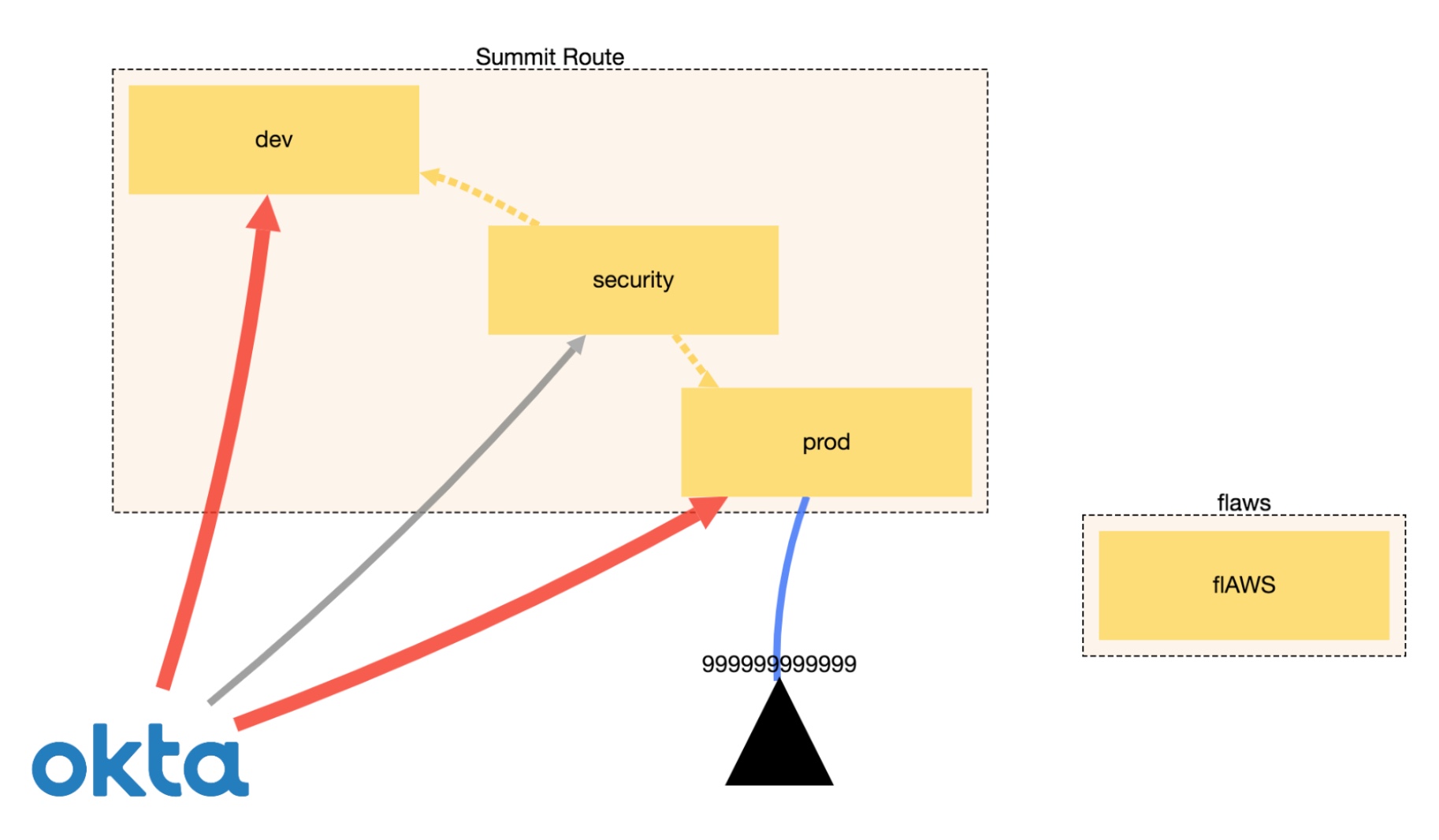

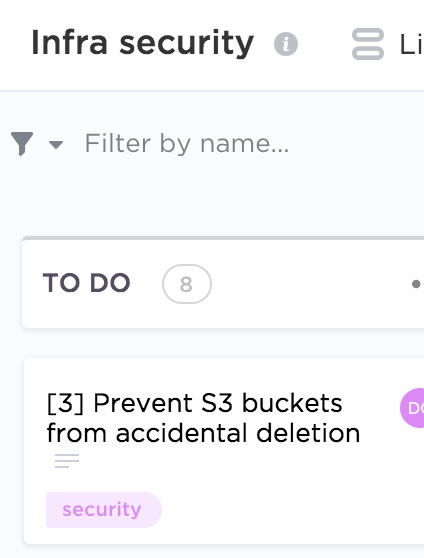

Cloud infrastructure security

To deliver resilient and secure service to build customer trust.

- Harden cloud infrastructure configuration

- Improve identity and access management practices

- Develop logging and monitoring capability

- Reduce attack surface and costs by decommissioning unused resources in the cloud

- Secure communications and encrypt sensitive data at rest and in transit
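Decommissioning unused resources starts with knowing what is idle. The sketch below assumes you can export an inventory as a list of records with an `id` and a timezone-aware `last_used` timestamp; that record shape, the `find_stale_resources` name and the 90-day default are all hypothetical and would need adapting to whatever your cloud provider reports.

```python
from datetime import datetime, timedelta, timezone


def find_stale_resources(inventory, now, max_idle_days=90):
    """Return the ids of resources not used within the last max_idle_days.

    `inventory` is an assumed format: a list of dicts with an "id" string
    and a timezone-aware "last_used" datetime.
    """
    cutoff = now - timedelta(days=max_idle_days)
    return [item["id"] for item in inventory if item["last_used"] < cutoff]


# Example usage with made-up data.
now = datetime(2024, 6, 1, tzinfo=timezone.utc)
inventory = [
    {"id": "vm-build-agent", "last_used": datetime(2024, 5, 30, tzinfo=timezone.utc)},
    {"id": "bucket-old-demo", "last_used": datetime(2023, 11, 2, tzinfo=timezone.utc)},
]
stale = find_stale_resources(inventory, now)
```

Treat the output as a review list rather than a deletion list: some rarely used resources, such as backups, are idle by design.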

Operations security

To prevent regulatory fines, potential litigation and loss of customer trust due to accidental mishandling, external system compromise or insider threat leading to exposure of customer personal data.

- Enable device (phone and laptop) encryption and automatic software updates

- Make a password manager available to your staff (and enforce a password policy)

- Improve email security (including anti-phishing protections)

- Implement mobile device management to enforce security policies

- Invest in malware prevention capability

- Segregate access and restrict permissions to critical assets

- Conduct security awareness training

Cyber resilience

To prepare for, respond to and recover from cyber attacks while delivering a consistent level of service to customers.

- Identify and focus on protecting your most important assets

- Develop (and test) an incident response plan

- Collect and analyse logs for fraud and attacks

- Develop anomaly detection capability

- Take regular backups of critical data

- Plan for disaster recovery and business continuity
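Anomaly detection does not have to start with a dedicated product. As a rough sketch, assuming you already count an event such as failed logins per hour, a simple z-score test can flag an hour that deviates sharply from recent history. The `is_anomalous` helper, the choice of metric and the threshold of 3 standard deviations are assumptions for illustration, not a recommended tuning.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, threshold=3.0):
    """Flag `latest` if it lies more than `threshold` sample standard
    deviations away from the mean of `history`."""
    if len(history) < 2:
        # Not enough data to estimate spread, so do not raise an alert.
        return False
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # History is perfectly flat: any change at all is unusual.
        return latest != mu
    return abs(latest - mu) / sigma > threshold


# Example usage with made-up hourly counts of failed logins.
failed_logins_per_hour = [4, 5, 6, 5, 4, 6, 5]
spike = is_anomalous(failed_logins_per_hour, 40)
normal = is_anomalous(failed_logins_per_hour, 6)
```

A baseline this simple ignores daily and weekly patterns, so expect false positives until it is refined.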

Compliance and data protection

To demonstrate to business partners, regulators, suppliers and customers your commitment to security and privacy, and to act as a brand differentiator. To prevent revenue loss and reputational damage due to fines and unwanted media attention as a result of GDPR non-compliance.

- Ensure lawfulness, fairness, transparency, data minimisation, security, accountability, purpose and storage limitation when processing personal data

- Optimise the subject access request process

- Maintain data inventory and mapping

- Conduct privacy impact assessments on new projects

- Classify data and define retention periods

- Manage vendor risk

- Improve governance and risk management practices

Image by Lennon Shimokawa.
