Security failures are rarely a technology problem alone. They’re socio-technical failures: mismatches between how controls are designed and how people actually work under pressure. If you want resilient organisations, start by redesigning security so it fits human cognition, incentives and workflows. Then measure and improve it.

Think like a behavioural engineer

Apply simple behavioural-science tools to reduce errors and increase adoption:

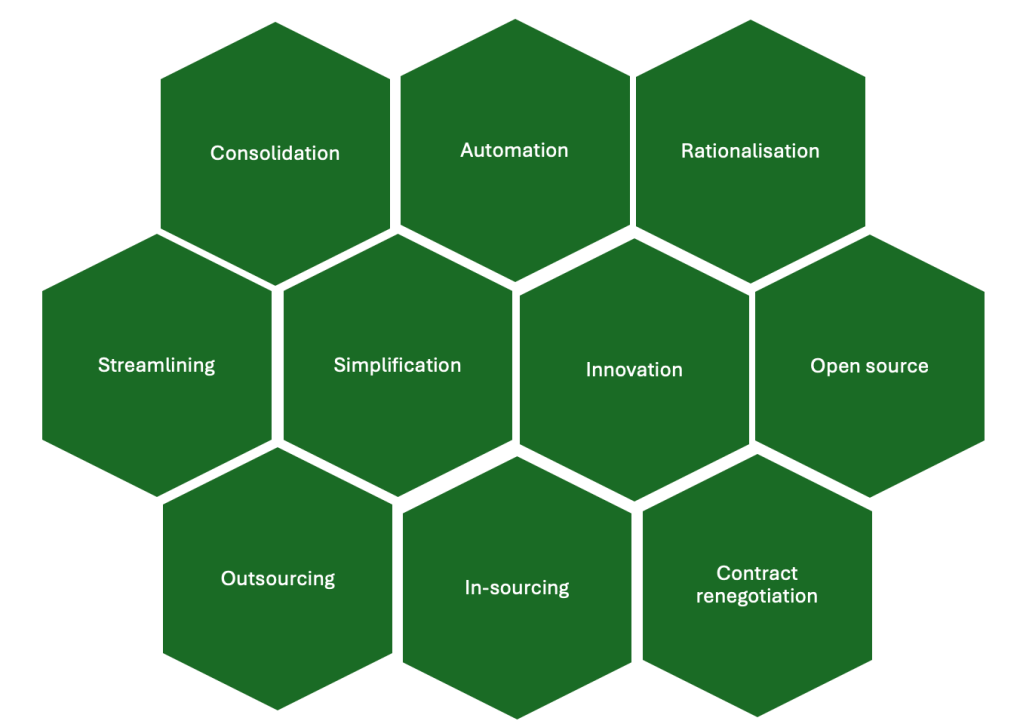

- Defaults beat persuasion. Make the secure choice the path of least resistance: automatic updates, default multi-factor authentication, managed device profiles, single sign-on with conditional access. Defaults change behaviour at scale without relying on willpower.

- Reduce friction where it matters. Map high-risk workflows (sales demos, incident response, customer support) and remove unnecessary steps that push people toward risky workarounds (like using unapproved software). Where friction is unavoidable, provide fast, well-documented alternatives.

- Nudge, don’t nag. Use contextual micro-prompts (like in-app reminders) at the moment of decision rather than one-off training. Framing matters: emphasise how a control helps the person do their job, not just what it prevents.

- Commitment and incentives. Encourage teams to publicly adopt small security commitments (e.g. “we report suspicious emails”) and recognise them. Social proof is powerful – people emulate peers more than policies.

Build trust, not fear

A reporting culture requires psychological safety.

- Adopt blameless post-incident reviews for honest mistakes; separate malice investigations from learning reviews.

- Be transparent: explain why rules exist, how they are enforced and what happens after a report.

- Lead by example: executives and managers must follow the rules visibly. Norms are set from the top.

Practical programme components

- Security champion network. One trained representative per team. Responsibilities: localising guidance, triaging near-misses and feeding back usability problems to the security team.

- Lightweight feedback loops. Short surveys, near-miss logs and regular champion roundtables to capture usability issues and unearth workarounds.

- Blameless playbooks. Clear incident reporting channels, response expectations, and public, learning-oriented postmortems.

- Measure what matters. Track metrics tied to risk and behaviour.

Metrics that inform action (not vanity)

Stop counting clicks and start tracking signals that show cultural change and risk reduction:

- Reporting latency: median time from detection to report. Increasing latency can indicate reduced psychological safety (fear of blame), friction in the reporting path (hard-to-find button) or gaps in frontline detection capability. A drop in latency after a campaign usually signals improved awareness or lowered friction.

Always interpret in context: rising near-miss reports with falling latency can be positive (visibility improving). Review report volume and type alongside latency before deciding how to respond.

- Inquiry rate: median number of proactive security inquiries (help requests, pre-deployment checks, risk questions) per reporting period. An increase usually signals growing trust and willingness to engage with security; a sustained fall may indicate rising friction, unresponsiveness or fear.

If the rate rises sharply with no matching reduction in incidents, check whether confusion is driving the questions (update the docs) or whether new features need security approvals (streamline the process).

- Confidence and impact: employees’ reported confidence to perform required security tasks (backups, secure file sharing, suspicious email reporting) and their belief that those actions produce practical organisational outcomes (risk reduction, follow-up action, leadership support).

An increase may signal stronger capability and perceived efficacy of security actions, while a decrease may indicate skills gaps, tooling or access friction, or a perception that actions don’t lead to change.

Metrics should prompt decisions (e.g., simplify guidance if dwell time on key security pages is low, fund an automated patching project if mean time to remediate is unacceptable), not decorate slide decks.
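As a rough illustration of how reporting latency might be calculated, here is a minimal Python sketch. It assumes each report is logged with a detection time and a report time as ISO 8601 strings; the `REPORTS` sample data and the `median_reporting_latency_minutes` helper are hypothetical and would need adapting to whatever your ticketing or reporting tool actually exports.

```python
from datetime import datetime
from statistics import median

# Hypothetical sample data: (detected_at, reported_at) pairs as ISO 8601 strings.
# In practice these would come from your own reporting or ticketing export.
REPORTS = [
    ("2024-03-01T09:00", "2024-03-01T09:20"),
    ("2024-03-02T14:00", "2024-03-02T16:00"),
    ("2024-03-05T08:30", "2024-03-05T08:40"),
    ("2024-03-07T11:00", "2024-03-07T12:00"),
]


def median_reporting_latency_minutes(reports):
    """Return the median minutes between detection and report, or None if empty."""
    latencies = []
    for detected_at, reported_at in reports:
        delta = datetime.fromisoformat(reported_at) - datetime.fromisoformat(detected_at)
        latencies.append(delta.total_seconds() / 60)
    # The median is less sensitive than the mean to a single very late report.
    return median(latencies) if latencies else None


if __name__ == "__main__":
    print(median_reporting_latency_minutes(REPORTS))
```

With the sample data above the script prints `40.0` (minutes). Computing the same figure before and after a change, such as a simpler reporting button, gives a baseline to compare against, but it should still be read alongside report volume and type.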

Experiment, measure, repeat

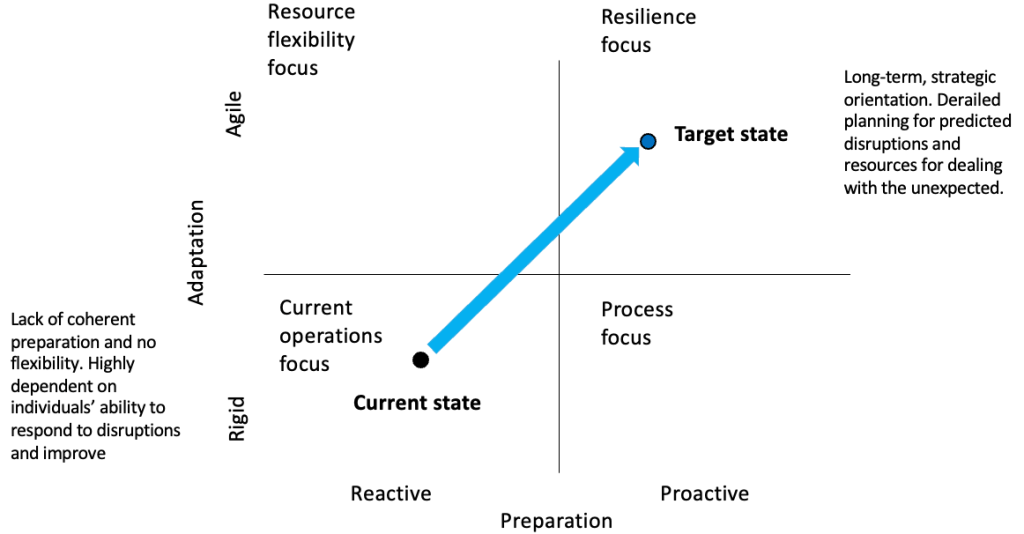

Treat culture change like product development: hypothesis → experiment → measure → adjust. Run small pilots (one business unit, one workflow), measure impact on behaviour and operational outcomes, then scale the successful patterns.

Things you can try this month

- Map 3 high-risk workflows and design safer fast paths.

- Stand up a security champion pilot in two teams.

- Change one reporting process to be blameless and measure reporting latency.

- Implement or verify secure defaults for identity and patching.

- Define 3 meaningful metrics and publish baseline values.

When security becomes the way people naturally work, supported by defaults, fast safe paths and a culture that rewards reporting and improvement, it stops being an obstacle and becomes an enabler. That’s the real return on investment: fewer crises, faster recovery and the confidence to innovate securely.

If you’d like to learn more, check out the second edition of The Psychology of Information Security for more practical guidance on building a positive security culture.